AI Uncovered: How to Protect Yourself in the Digital World

In today’s world, Artificial Intelligence (AI) is no longer a distant concept or a tool reserved for research labs – it has become deeply integrated into our daily lives. From virtual assistants and customer service chatbots to creative design tools and business automation, AI now supports us in countless ways, both personally and professionally. Through frequent interactions with AI, you can even create an AI twin – a digital version of yourself that imitates your personality, communication style, or behavior patterns. This technology opens new possibilities for creativity, productivity, and personalized experiences.

But opportunity comes with risk. In early 2025, several public figures reported that AI-generated clones of their likenesses had been created without consent and used in scams ranging from fake investment pitches to fraudulent product endorsements.

Without proper awareness, however, using AI can bring significant risks. Careless use of AI tools may lead to unintentional data leaks, especially when users share sensitive information such as passwords, financial data, or customer records. Malicious actors can also exploit AI to impersonate you, creating deepfakes, fraudulent emails, or cloned voices that appear authentic.

This guide presents seven practical tips for using AI safely, helping you balance convenience with control.

Understand AI Twins and Why They Require Caution

Before considering how to protect yourself, it is important to understand what an AI twin truly represents. An AI twin, sometimes referred to as a digital twin, is a synthetic identity generated by artificial intelligence. It is built from personal inputs such as your thoughts, data, images, voice recordings, or written text. Unlike a static photograph, it can be dynamic, capable of moving, speaking, and interacting in real time.

Industry research indicates that incidents of AI-related privacy breaches are increasing year over year, a trend that highlights the urgency of adopting protective measures.

To use AI responsibly and securely, organizations and individuals should follow a few essential practices:

What NOT to Share with AI Tools

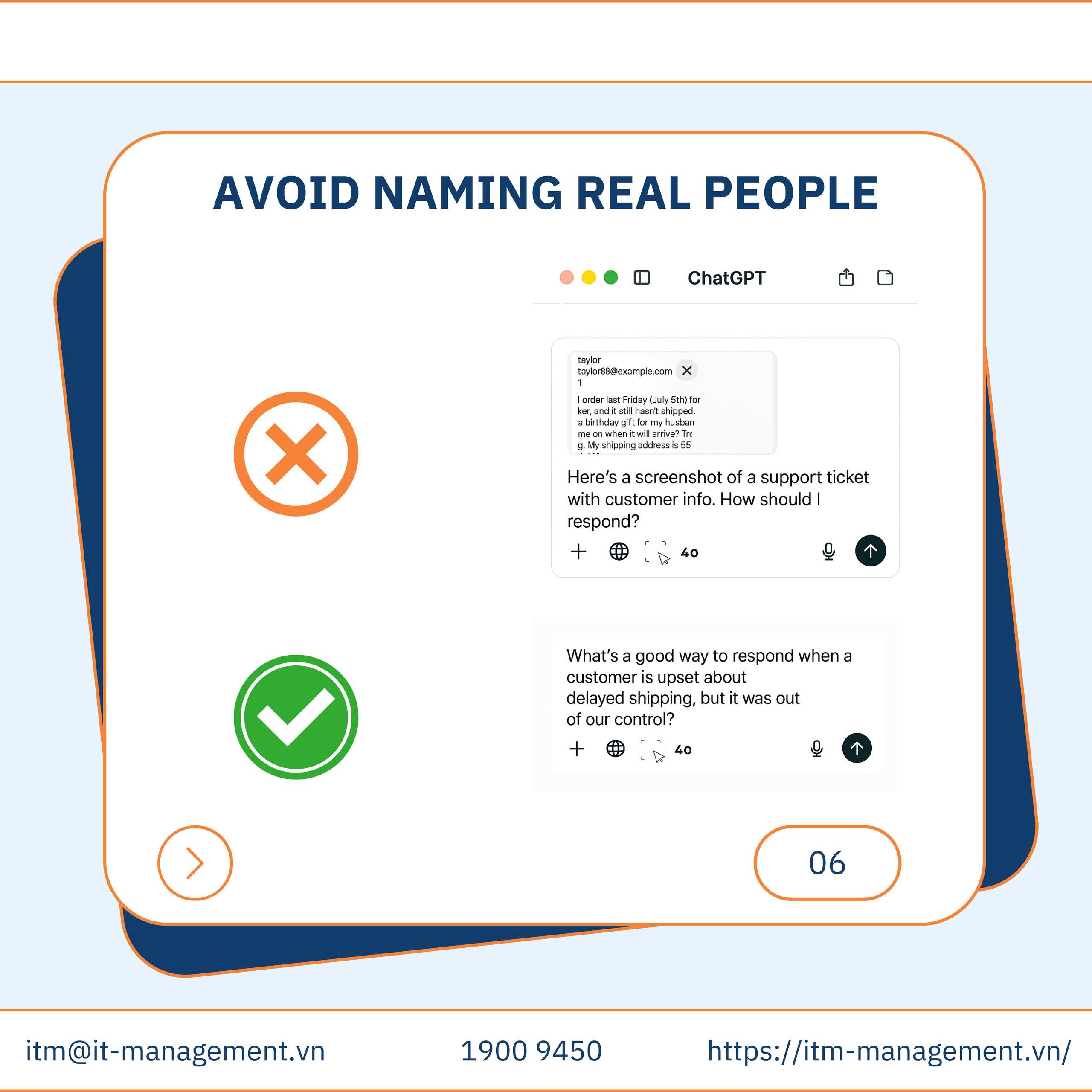

To keep your data – and your company’s – safe, avoid entering any real or private details into AI chat boxes.

Do not share:

- 🪪 Personal identification numbers (e.g., ID, passport, or employee codes)

- 👥 Client names or contact details

- 💼 Financial or legal documents

- 🧭 Internal company strategies or reports

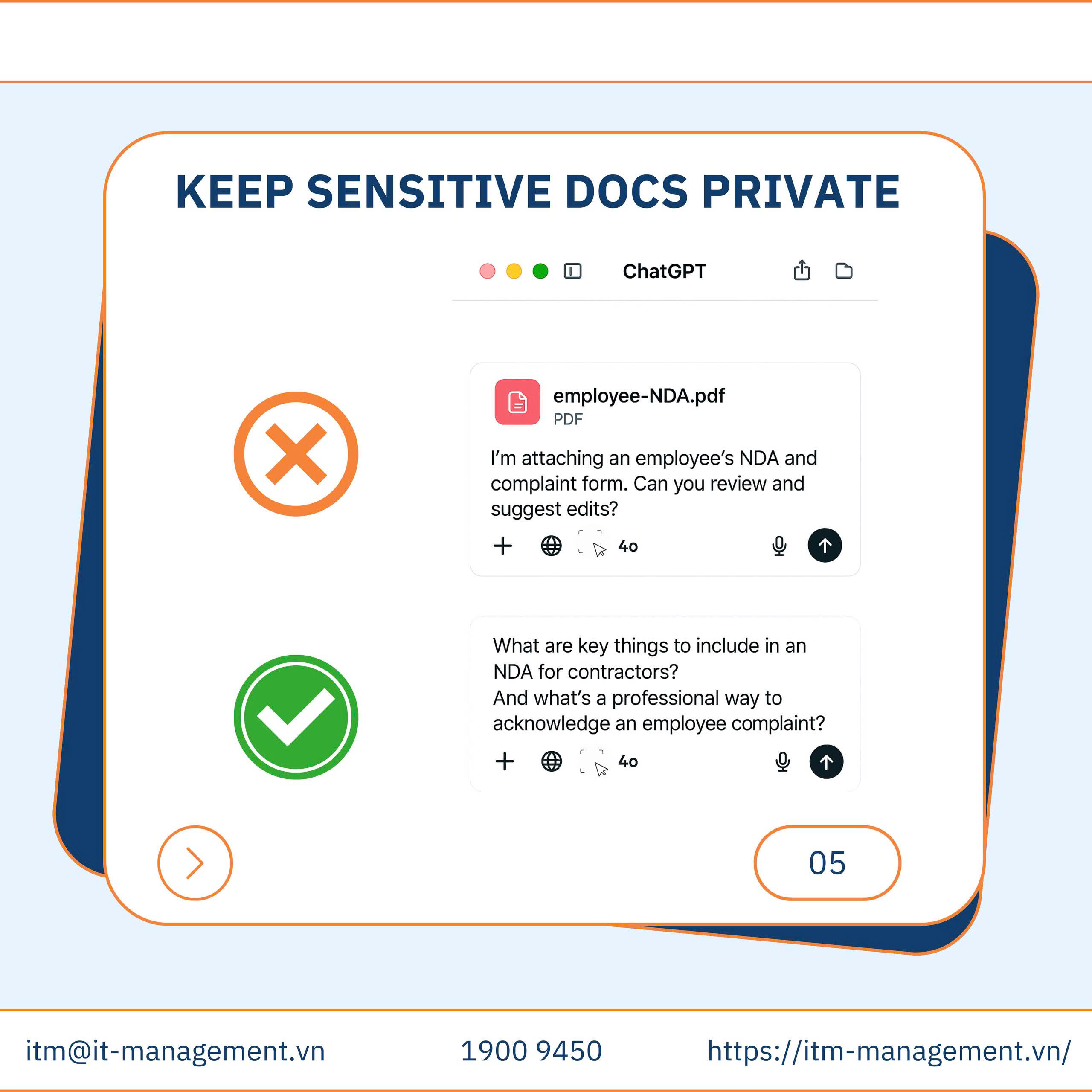

- 📄 NDA-protected or compliance-related information

Even a small detail can lead to unintended data exposure.

How to Stay Safe When Using AI

Follow these smart usage tips to enjoy the benefits of AI — without risking your privacy or compliance:

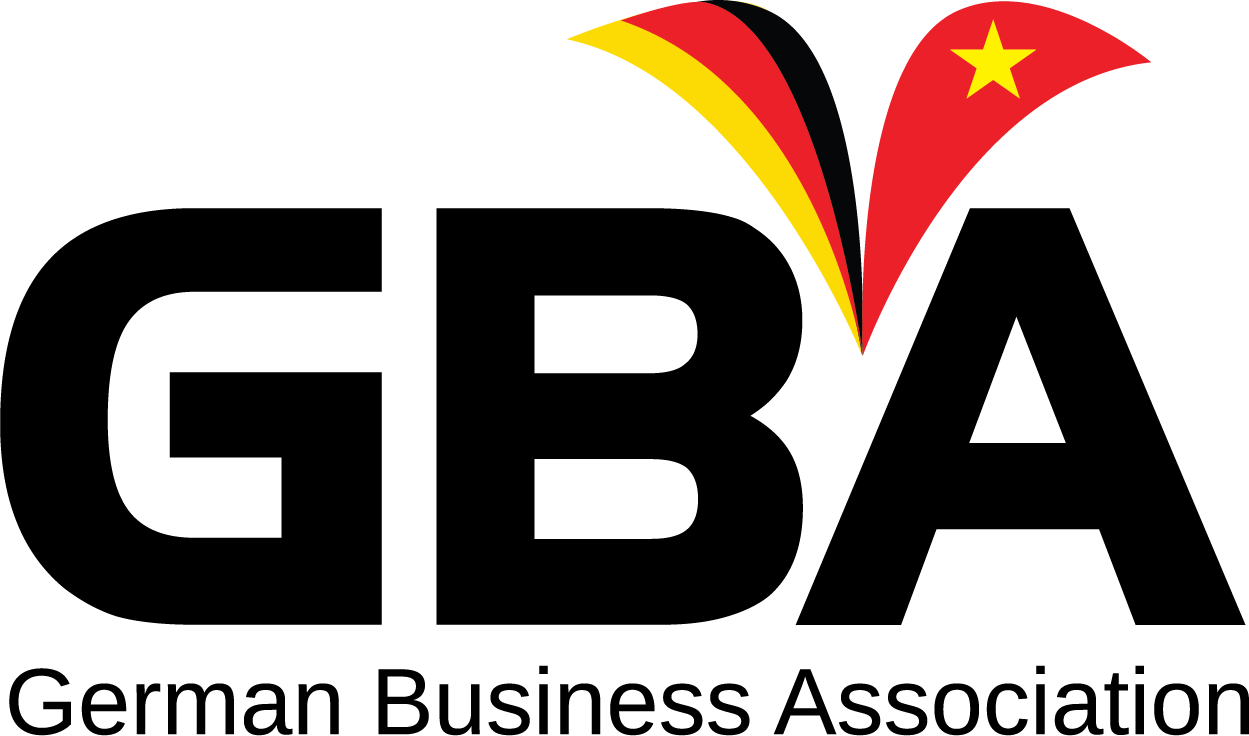

- 🔒 Keep customer data private — Never input real client information or contact details.

- 🩺 Ask health questions broadly — Avoid sharing personal medical details.

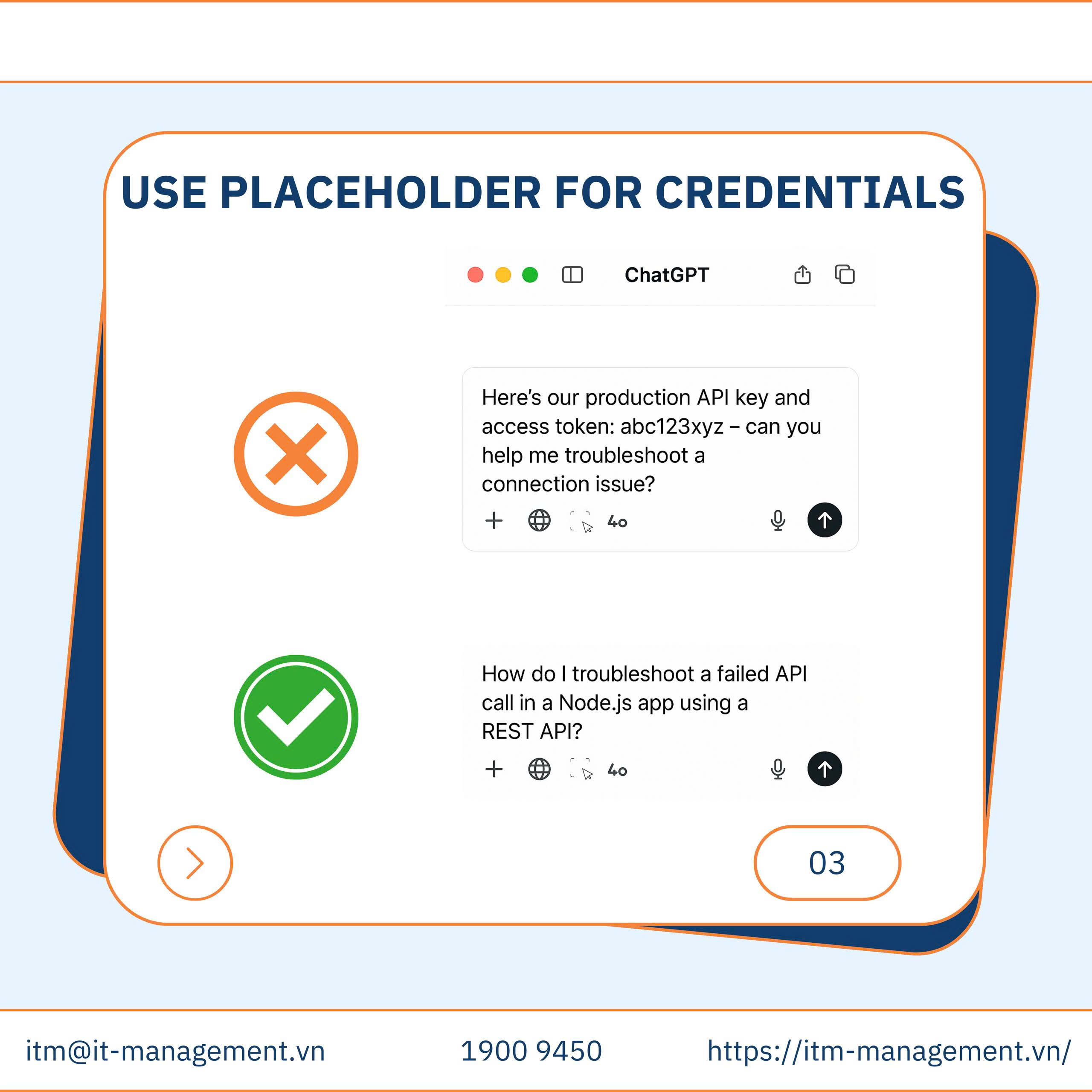

- 🧩 Use placeholders for identifiers — Replace names or codes with generic terms (e.g., [Client A], [Project X]).

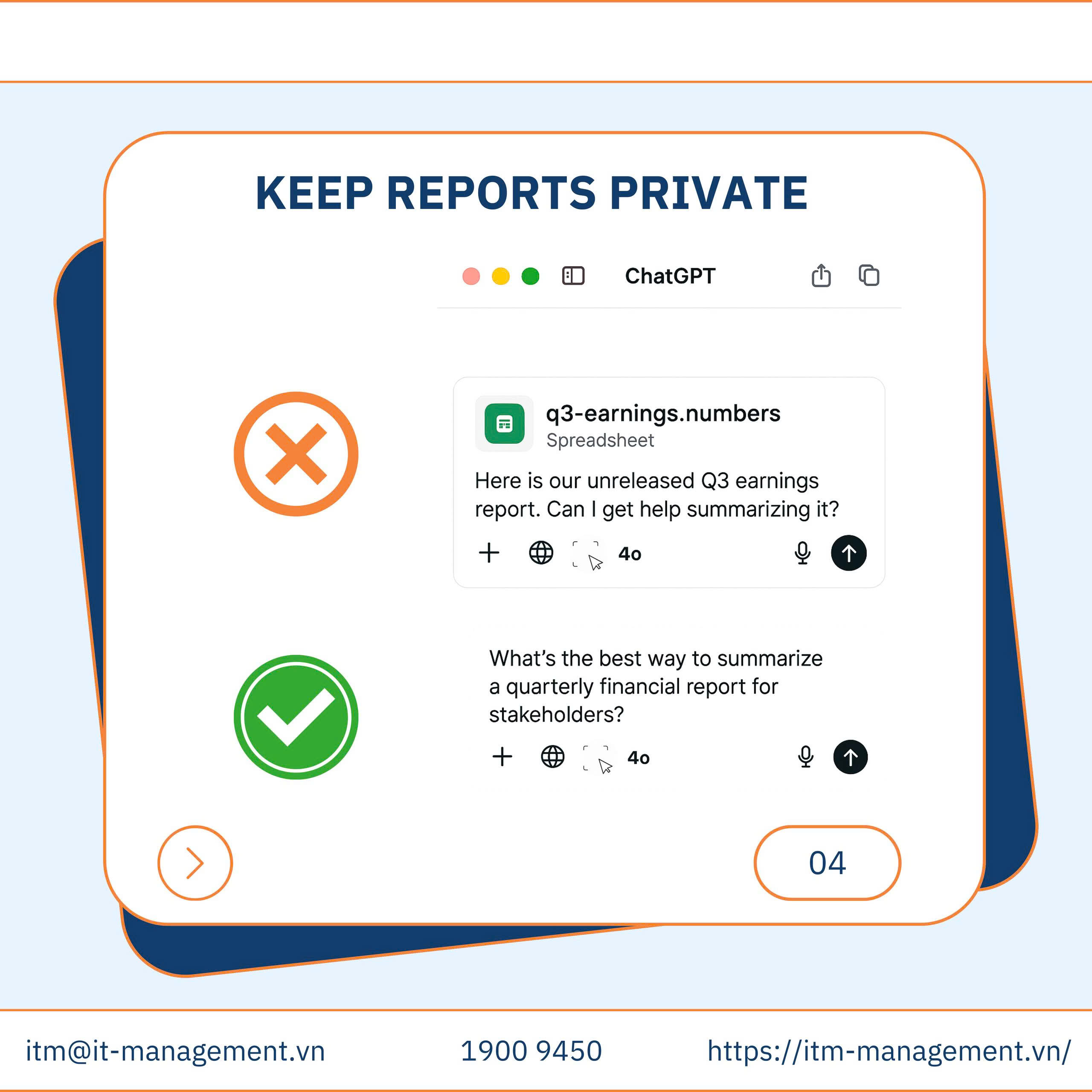

- 📊 Keep internal reports private — Do not upload internal analyses, dashboards, or work files.

- 🗂️ Keep sensitive documents off AI platforms — Never share contracts, budgets, or HR records.

- 🙈 Avoid naming real people — Use roles or job titles instead (e.g., Finance Manager instead of Nguyen).

- ⚙️ Check and adjust privacy settings — Disable options such as “Data sharing” or “Allow AI to use my data for training” before using any AI tool.
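If you prepare prompts in a script or internal tool, the placeholder tip can be partly automated. The Python sketch below is a minimal illustration under stated assumptions, not a complete data-loss-prevention tool: the `redact` helper and the names in `PLACEHOLDERS` are hypothetical examples, and the email pattern is deliberately simple, so other identifiers such as phone or ID numbers would still need their own rules.

```python
import re

# Hypothetical mapping from real identifiers to generic placeholders.
PLACEHOLDERS = {
    "Acme Corp": "[Client A]",
    "Project Falcon": "[Project X]",
    "Nguyen": "[Finance Manager]",
}

# Deliberately loose email pattern; not a full RFC 5322 validator.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact(text: str, placeholders: dict[str, str]) -> str:
    """Swap known names for placeholders and mask email addresses."""
    # Replace longer names first so a short name inside a longer one
    # does not get replaced before the longer name is matched.
    for real in sorted(placeholders, key=len, reverse=True):
        text = text.replace(real, placeholders[real])
    # Mask anything that looks like an email address.
    return EMAIL_RE.sub("[email]", text)


if __name__ == "__main__":
    prompt = "Draft a reply to Nguyen at Acme Corp (nguyen@acme.com) about Project Falcon."
    print(redact(prompt, PLACEHOLDERS))
```

Running it prints `Draft a reply to [Finance Manager] at [Client A] ([email]) about [Project X].` Note that the name matching is case-sensitive, which is why the lowercase email address is caught by the email pattern rather than by the name list.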

AI tools like ChatGPT, Copilot, and others can help you write, code, and brainstorm faster than ever.

However, if used carelessly, they can also expose sensitive or confidential information.

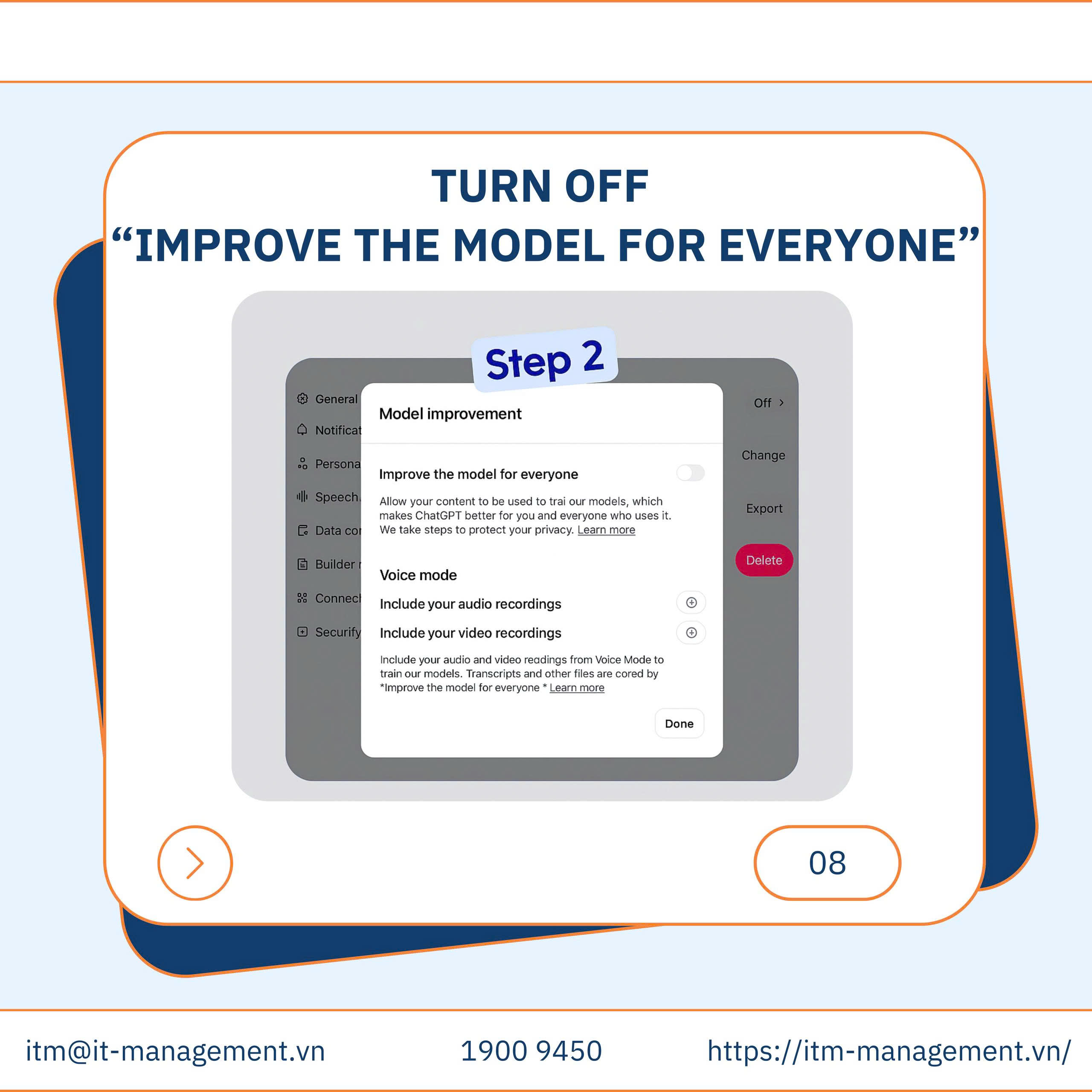

By default, ChatGPT may use your conversations to help “improve the model for everyone.”

That means your chat content could be reviewed, stored, and used to train future versions of the model. The same can apply to other AI tools, such as Copilot, unless you change their settings. Therefore, it is essential to check the privacy settings and disable data sharing if you’re handling sensitive or company-related information.

To protect your privacy and prevent your data from being used in model training, follow these two simple steps:

- Step 1: Go to Settings → Data Controls
  - Open ChatGPT in your browser or app.
  - Click your profile icon (or your name) in the bottom-left corner.
  - Select Settings from the menu.
  - In the sidebar, click Data Controls.
  - Look for the option “Improve the model for everyone.”

- Step 2: Turn off “Improve the model for everyone.”
  - Click the switch next to “Improve the model for everyone.”
  - Toggle it off; the switch should no longer be highlighted.
  - Once it’s off, new chats will no longer be used to train the model.

What to Be Aware of When Using AI

- Beware of Fake AI Apps and Extensions

Cybercriminals create malicious browser extensions or fake AI apps that mimic popular tools. These can steal data or install malware. Only download AI tools and extensions from trusted sources, such as the vendor’s official website or an official app store, and check with your IT team before installing anything.

- Double-Check AI-Generated Information

AI tools can be helpful, but they’re not always accurate or unbiased. Don’t blindly trust the output, especially when using it for work. Always verify facts, sources, and context before sharing or acting on AI-generated content.

- Browser Extensions Can See Everything

If you use AI browser extensions, be aware that, depending on the permissions you grant, they may be able to see your open tabs, what you click, and your browsing history, even when you’re not actively using them. Where your browser supports it, limit extensions so they run only when you click them.

Stay Smart, Stay Secure in the Age of AI

Artificial Intelligence is transforming the way we work, connect, and create — but as it becomes more integrated into daily life, the line between innovation and intrusion grows thinner. Protecting your data, identity, and reputation in this digital era requires not just technology, but awareness and responsibility.

Every click, upload, or prompt can shape your digital footprint. By using AI wisely — with caution, transparency, and security in mind — you can harness its full potential while minimizing the risks of data leaks, impersonation, and misinformation.

At the end of the day, AI is only as safe as the person who uses it.

Take Control of Your AI Identity

Empower your team with the knowledge to recognize, prevent, and respond to AI-driven threats.

ITM gives you the knowledge and tools to stay in control:

- Detect manipulation and deepfakes before they spread.

- Understand different types of cyberattacks and how they impact your data.

- Learn how to use AI safely and responsibly.

- Develop practical habits to secure your devices, manage data, and navigate social media and mobile platforms safely.

- Build long-term digital resilience through awareness and proactive safeguards.

📩 With ITM’s Security Awareness Training and Data Protection Solutions, your team gains the knowledge, and your systems the protection, needed to recognize threats before they become breaches.

Let ITM be your trusted partner for a safer, smarter, and cyber-ready digital identity.